A Perfect Storm: The Record of a Revolution

Footnote

1 The analogy to political revolutions was presented earlier by Bobbie Spellman (e.g., https://morepops.wordpress.com/2013/05/27/research-revolution-2-0-the-current-crisis-how-technology-got-us-into-this-mess-and-how-technology-will-help-us-out/).

The First Fundamental Challenge of Empirical Research: Separating Postdiction from Prediction

The first fundamental challenge deals with the key distinction between research that is exploratory (i.e., hypothesis-generating) versus confirmatory (i.e., hypothesis-testing). In his book “Methodology”, de Groot (1969, p. 52) states: “It is of the utmost importance at all times to maintain a clear distinction between exploration and hypothesis testing. The scientific significance of results will to a large extent depend on the question whether the hypotheses involved had indeed been antecedently formulated, and could therefore be tested against genuinely new materials. Alternatively, they would, entirely or in part, have to be designated as ad hoc hypotheses (...)”. Similarly, Barber (1976, p. 20) warns us that: "When the investigator has not planned the data analysis beforehand, he may find it difficult to avoid the pitfall of focusing only on the data which look promising (or which meet his expectations or desires) while neglecting data which do not seem 'right' (which are incongruous with his assumptions, desires, or expectations)."

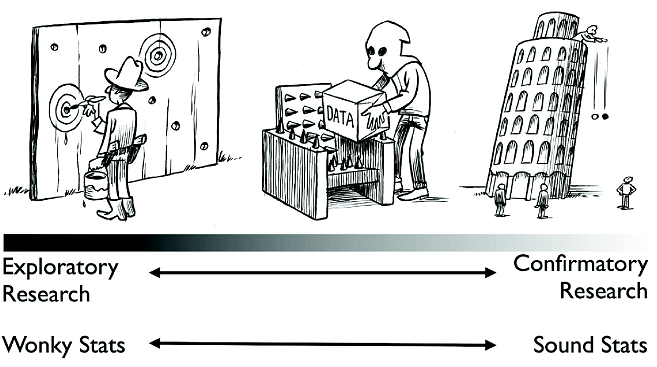

The core of the problem is that hypothesis testing requires predictions; as the word implies, these are statements about reality that do not have the benefit of hindsight. Include the benefit of hindsight, however, and predictions turn into postdictions. Postdictions are often remarkably accurate, but their accuracy is hardly surprising. Figure 1 illustrates the continuum from exploratory to confirmatory research and highlights the need to cleanly separate the two. Note that the Texas sharpshooter (Figure 1, left end of continuum) appears to have remarkably good aim, but only because he uses postdiction instead of prediction. True prediction requires complete transparency, including a preregistered plan of analysis (Figure 1, right end of continuum, where a clear prediction is tested under public scrutiny). Most research today uses an unknown mix of prediction and postdiction. As Figure 1 highlights, statistical methods for hypothesis testing are only valid for research that is strictly confirmatory (e.g., de Groot, 1956/2014; Goldacre, 2008).

Figure 1. A continuum of experimental exploration and the corresponding continuum of statistical wonkiness (Wagenmakers et al., 2012). “On the far left of the continuum, researchers find their hypothesis in the data by post hoc theorizing, and the corresponding statistics are ‘wonky’, dramatically overestimating the evidence for the hypothesis. On the far right of the continuum, researchers preregister their studies such that data collection and data analyses leave no room whatsoever for exploration; the corresponding statistics are ‘sound’ in the sense that they are used for their intended purpose. Much empirical research operates somewhere in between these two extremes, although for any specific study the exact location may be impossible to determine. In the grey area of exploration, data are tortured to some extent, and the corresponding statistics are somewhat wonky.” Figure available at http://www.flickr.com/photos/23868780@N00/7374874302, courtesy of Dirk-Jan Hoek, under CC license https://creativecommons.org/licenses/by/2.0/.

It should be stressed that the problem is not with exploratory research itself. Rather, the problem is with dishonesty, that is, pretending that exploratory research was instead confirmatory.
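The cost of presenting postdiction as prediction can be illustrated with a small simulation. The sketch below is not taken from the sources cited above; it assumes a hypothetical study with 20 outcome measures, two groups of 20 participants, normally distributed data with a known standard deviation of 1, and no true effect on any measure. The "confirmatory" researcher tests only the first outcome, as if it had been preregistered; the "sharpshooter" reports whichever outcome yields the smallest p-value. The function names and all parameter values are illustrative choices.

```python
import random
from statistics import NormalDist, fmean


def two_sided_p(group_a, group_b, sigma=1.0):
    """Two-sided z-test p-value for a difference in means.

    Assumes equal group sizes and a known standard deviation (sigma).
    """
    n = len(group_a)
    standard_error = sigma * (2.0 / n) ** 0.5
    z = (fmean(group_a) - fmean(group_b)) / standard_error
    return 2.0 * (1.0 - NormalDist().cdf(abs(z)))


def simulate(n_studies=2000, n_outcomes=20, n_per_group=20, alpha=0.05, seed=1):
    """Return the false positive rates (confirmatory, postdiction) under a true null."""
    rng = random.Random(seed)
    confirmatory_hits = 0
    postdiction_hits = 0
    for _ in range(n_studies):
        p_values = []
        for _ in range(n_outcomes):
            # Both groups come from the same distribution: the null is true.
            a = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
            b = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
            p_values.append(two_sided_p(a, b))
        # Prediction: only the outcome chosen in advance is tested.
        confirmatory_hits += p_values[0] < alpha
        # Postdiction: the best-looking outcome is picked after seeing the data.
        postdiction_hits += min(p_values) < alpha
    return confirmatory_hits / n_studies, postdiction_hits / n_studies


if __name__ == "__main__":
    confirmatory_rate, postdiction_rate = simulate()
    print(f"confirmatory false positive rate: {confirmatory_rate:.3f}")
    print(f"postdiction false positive rate:  {postdiction_rate:.3f}")
```

Under these assumptions the confirmatory rate should stay close to the nominal 5%, whereas the postdiction rate should approach 1 - 0.95^20, roughly 64%, because 20 independent chances to cross the threshold are presented as one.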

The Second Fundamental Challenge of Empirical Research: The Violent Bias of the P-Value

Across the empirical sciences, researchers rely on the infamous p-value to discriminate the signal from the noise. The p-value quantifies the extremeness or unusualness of the obtained results, given that the null hypothesis is true and no difference is present. Commonly, researchers "reject the null hypothesis" when p<.05, that is, when chance alone is deemed an insufficient explanation for the results.
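To make the definition concrete, consider a hypothetical example that is not part of the original text: a coin is flipped 100 times and lands heads 60 times. Under the null hypothesis that the coin is fair, the two-sided p-value is the total probability of all outcomes at least as far from 50 heads as the observed one. The sketch below computes this exactly; the function name and the coin-flip data are illustrative assumptions.

```python
from math import comb


def fair_coin_p_value(heads, flips):
    """Exact two-sided p-value for `heads` out of `flips` under a fair-coin null.

    Sums the null probabilities of every outcome that lies at least as far
    from the expected count (flips / 2) as the observed count does.
    """
    expected = flips / 2
    observed_distance = abs(heads - expected)
    p_value = 0.0
    for k in range(flips + 1):
        if abs(k - expected) >= observed_distance:
            # Probability of exactly k heads when each flip is 50/50.
            p_value += comb(flips, k) / 2 ** flips
    return p_value


if __name__ == "__main__":
    for heads in (55, 60, 61):
        print(heads, round(fair_coin_p_value(heads, 100), 4))
```

In this example 60 heads falls just short of the conventional p<.05 threshold, whereas 61 heads crosses it. Note that the computation conditions only on the null hypothesis; nothing in it refers to how likely the data would be if the coin were biased.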