A Perfect Storm: The Record of a Revolution

Over the course of decades, statisticians and philosophers of science have repeatedly pointed out that p-values overestimate the evidence against the null hypothesis. That is, p-values suggest that effects are present when the data offer only limited or no support for such a claim (Nuzzo, 2014, and references therein). Ward Edwards (1965, p. 400) stated that "Classical significance tests are violently biased against the null hypothesis". It comes as no surprise that replicability suffers when the most popular method for testing hypotheses is violently biased against the null hypothesis.

A mathematical analysis of why p-values are violently biased against the null hypothesis would take us too far afield, but the intuition is as follows: the p-value considers only the extremeness of the data under the null hypothesis of no difference, and it ignores the extremeness of the data under the alternative hypothesis. Hence, data can be extreme or unusual under the null hypothesis, but if these data are even more extreme under the alternative hypothesis then it is nevertheless imprudent to "reject the null". As argued by Berkson (1938, p. 531): "My view is that there is never any valid reason for rejection of the null hypothesis except on the willingness to embrace an alternative one. No matter how rare an experience is under a null hypothesis, this does not warrant logically, and in practice we do not allow it, to reject the null hypothesis if, for any reasons, no alternative hypothesis is credible."

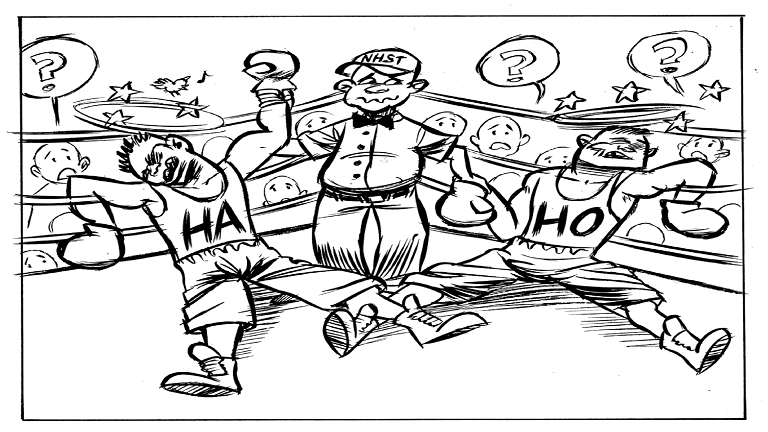

This situation is illustrated in Figure 2. The lesson is that evidence is a concept that is relative rather than absolute; when we wish to grade the decisiveness of the evidence provided by the data, this requires that we consider both a null hypothesis of no difference as well as a properly specified alternative hypothesis. By only focusing on one side of the coin the p-value biases the conclusion away from the null hypothesis, effectively encouraging the pollution of the field with results whose level of evidence is not worth more than a bare mention (e.g., Wetzels et al., 2011).
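The relative nature of evidence can be made concrete with a small numerical sketch. The example below is not taken from any of the cited papers; it assumes a sample mean of n = 100 observations with known standard deviation 1, a null hypothesis that the true mean is 0, and an alternative hypothesis under which the true mean is drawn from a normal distribution with mean 0 and standard deviation `tau` = 1. The function names, the choice of prior, and all numerical settings are illustrative assumptions. The code compares the two-sided p-value with the ratio of how well each hypothesis predicts the observed mean.

```python
import math


def normal_pdf(x, mean, sd):
    """Density of a normal distribution with the given mean and sd."""
    return math.exp(-0.5 * ((x - mean) / sd) ** 2) / (sd * math.sqrt(2 * math.pi))


def two_sided_p(z):
    """Two-sided p-value for a z statistic: P(|Z| >= |z|) under H0."""
    return math.erfc(abs(z) / math.sqrt(2))


def bf01(xbar, n, sigma=1.0, tau=1.0):
    """Ratio of the density of the observed mean under H0 to that under H1.

    H0: the true mean is exactly 0.
    H1 (an assumption made for illustration): the true mean is drawn
    from Normal(0, tau), so the observed mean has variance
    sigma^2 / n + tau^2 under H1.
    Values above 1 mean the data are better predicted by H0.
    """
    se = sigma / math.sqrt(n)
    density_h0 = normal_pdf(xbar, 0.0, se)
    density_h1 = normal_pdf(xbar, 0.0, math.sqrt(se ** 2 + tau ** 2))
    return density_h0 / density_h1


# Hypothetical data: a sample mean that sits right at the .05 threshold.
n = 100
xbar = 0.196
z = xbar * math.sqrt(n)  # z = 1.96 with sigma = 1

print(f"two-sided p-value: {two_sided_p(z):.3f}")
print(f"BF01 (H0 over H1): {bf01(xbar, n):.2f}")
```

Under these assumed settings the p-value is about .05, yet the ratio comes out at roughly 1.5 in favor of the null hypothesis: the data are unusual under H0 but slightly more unusual under this particular H1. A different alternative (for example, a smaller `tau`) would give a different ratio, which is exactly the sense in which the evidence depends on a properly specified alternative.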

Figure 2. A boxing analogy of the p-value (Wagenmakers et al., in press). By considering only the dismal state of boxer H0 (i.e., the null hypothesis), the referee who uses Null-Hypothesis Significance Testing makes a decision that puzzles the public. Figure available at http://www.flickr.com/photos/23868780@N00/12559689854/, courtesy of Dirk-Jan Hoek, under CC license https://creativecommons.org/licenses/by/2.0/.


The Future of the Revolution: A Positive Outlook

In short order, the shock effect from well-documented cases of fraud, fudging, and non-replicability has created an entire movement of psychologists who seek to improve the openness and reproducibility of findings in psychology (e.g., Pashler & Wagenmakers, 2012). Special mention is due to the Center for Open Science (http://centerforopenscience.org/); spearheaded by Brian Nosek, this center develops initiatives to promote openness, data sharing, and preregistration. Another initiative is http://psychfiledrawer.org/, a repository where researchers can upload results that would otherwise have been forgotten. In addition, Chris Chambers has argued for the importance of distinguishing between prediction and postdiction by means of Registered Reports; these reports are based on a two-step review process that includes, in the first step, review of a registered analysis plan prior to data collection. This plan identifies, in advance, what hypotheses will be tested and how (Chambers, 2013; see also https://osf.io/8mpji/wiki/home/). Thanks in large part to his efforts, many journals have now embraced this new format. With respect to the violent bias of p-values, Jeff Rouder, Richard Morey, and colleagues have developed a series of Bayesian hypothesis tests that allow a more honest assessment of the evidence that the data provide for and against the null hypothesis (Rouder et al., 2012; see also www.jasp-stats.org). The reproducibility frenzy also includes the development of new methodological tools; proposals for different standards for reporting, analysis, and data sharing; encouragement to share data by default (e.g., http://agendaforopenresearch.org/); and a push toward experiments with high power.