How perception and action emerge: Stories of a puzzling mind

Editorial assistants: Jana Dreston, Stella Wernicke

Note: An earlier version of this article has been published in the German version of In-Mind.

Nothing seems as simple to us as perceiving the world around us. But in fact, the way our brain processes sensory input is astonishingly complex. It first breaks down our sensory impressions into small individual features. These individual features must then be put together like a jigsaw puzzle. But how does our brain "know" which features belong to which object, person, or environmental event?

In every moment of our lives, we see, hear, smell, and feel a multitude of things. At the same time, we engage in a variety of activities, such as driving a car, listening to the radio, or talking to our fellow passengers. But what is actually going on in our mind when we perceive events surrounding us and carry out goal-oriented actions? This fundamental question of cognitive psychology has led to astonishing results about how the human brain works.

The puzzle in your mind: How do perceptual impressions arise?

Nothing seems to be as simple and straightforward as perceiving our environment: just open your eyes, and you see things in your surroundings, such as trees, houses, or streets. At the same time, you hear sounds such as people talking or laughing, traffic, birdsong, and so on. Taking a closer look at how the brain processes such sensory input, however, reveals a surprisingly complex architecture. This complexity is especially evident in the fact that our brain dissects incoming information into individual, independent features.

For visual sensations, this works as follows: When we walk through a park, our brain breaks down the incoming impressions into colors, orientations, brightness, direction of movement, and so on. This means that, before we consciously perceive a flowering tree, our brain has already done a lot of work: It has cataloged the green, brown, and white colors present, broken down the shape of the tree into a number of small edges, and registered the presence of movement, for example, when the wind blows through the leaves. All of these features are processed independently of one another [1]. The result is a large number of extracted features that now have to be put together like a jigsaw puzzle. But which pieces belong together? In other words, how does the brain “know” which features belong to which object, to which person, or, more generally, to which visual event?

Lapses of attention trigger erroneous perceptions: The case of illusory conjunctions

This seems like a difficult task, but our brain uses a trick to solve this puzzle: it assumes that all features that are activated at the same time in the same place probably belong to the same object [1]. In the tree example, many brown features and vertical edges are simultaneously activated in the lower part of our visual field. These form the tree trunk. Various green, horizontal, and diagonal features in many small locations in the upper field of vision are connected to form leaves, and so on. By attending to a location, the brain can correctly connect the different features of the object at that location with one another; this is the moment when we consciously perceive things like the trunk, leaves, and branches as objects.

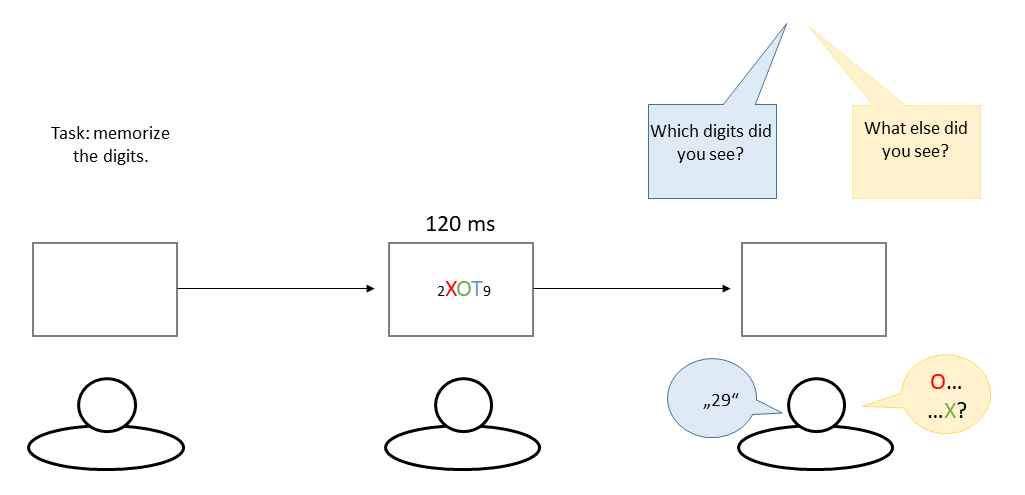

The process of initially extracting and subsequently integrating individual features into objects can be demonstrated in laboratory studies [2]. In a typical experiment, participants sit at a computer and are shown colored letters, such as a green X and a red O. These letters are presented for a very short time (about 120 milliseconds, i.e., just over a tenth of a second). Just before each stimulus appears, participants are distracted so that their attention is no longer on the location where the letters appear. As a result, participants often report so-called illusory conjunctions. For example, they report having seen a green O and a red X, although these stimuli were never actually shown on the screen. Note that the participants perceived the correct features; however, because they were distracted, their brain put them together incorrectly [2]. Hence, attention is central to integrating individual features into holistic objects and solving the puzzle of the extracted features.

Experimental setup of Treisman and Schmidt (1982). Participants had to report the flanking digits, which drew their attention away from the letters. The stimulus array (consisting of digits and letters) was only briefly presented. Subsequently, participants had to report which digits and letters they saw. Participants often report illusory conjunctions between the color- and form-features of unattended letters.
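The co-location rule and its breakdown under distraction can be illustrated with a toy Python sketch. It is not a model taken from [1] or [2]; the display, the feature labels, and the function `bind_features` are hypothetical. With attention, features registered at the same location are grouped into one object. Without attention, the sketch simply assumes that location information is unavailable and recombines the correctly extracted colors and shapes at random, which sometimes produces an illusory conjunction.

```python
import random

# Hypothetical display from the letter example: a green X and a red O.
# Each extracted feature is recorded as (location, dimension, value).
DISPLAY = [
    ("left", "color", "green"),
    ("left", "shape", "X"),
    ("right", "color", "red"),
    ("right", "shape", "O"),
]


def bind_features(display, attended, rng):
    """Return the perceived (color, shape) conjunctions, sorted for easy reading."""
    if attended:
        # Co-location rule: features registered at the same place form one object.
        by_location = {}
        for location, dimension, value in display:
            by_location.setdefault(location, {})[dimension] = value
        pairs = [(obj["color"], obj["shape"]) for obj in by_location.values()]
    else:
        # Toy assumption: without attention, location is unavailable, so the
        # (correctly extracted) colors and shapes are recombined at random.
        colors = [value for _, dimension, value in display if dimension == "color"]
        shapes = [value for _, dimension, value in display if dimension == "shape"]
        rng.shuffle(colors)
        pairs = list(zip(colors, shapes))
    return sorted(pairs)


rng = random.Random(0)
print(bind_features(DISPLAY, attended=True, rng=rng))   # [('green', 'X'), ('red', 'O')]
print(bind_features(DISPLAY, attended=False, rng=rng))  # correct or swapped, by chance
```

Calling the unattended version repeatedly yields the correct pairing on some trials and the swapped pairing (a green O and a red X) on others. The random shuffle is only a stand-in for lost location information, not an estimate of how often illusory conjunctions actually occur.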

What applies to perception also applies to action planning

Cognitive psychologists often refer to the integration of individual features as binding, and the resulting connections are consequently called bindings. Through bindings, features are integrated into a perceptual impression (e.g., an object). Bindings are temporary connections among features that are stored in episodic memory. Bindings can connect features within a sensory channel, such as color (red), shape (round), and size (small), into integrated objects (e.g., a tomato). Bindings can also connect features from different sensory channels. Think of the blue light of a fire engine with the accompanying “nee-naw” siren sound: these are bindings between visual and auditory features.

What is true for perception is also true for action (for an introductory In-Mind article, see [3]). For example, if we want to reach for a flower by extending our arm, the corresponding movement is composed of numerous independent features, such as the coordinates of the target and the speed and force of the movement. There is compelling evidence that action plans arise from the binding of initially unrelated movement features [4].

Bindings between perception and action

How do we manage to move purposefully through our environment? How do we make sure that we are actually reaching for the flower we want to pick and do not grab the empty beer bottle lying next to it instead? Can we bind our actions (reaching) with sensory impressions (flower)? This is indeed the case, as bindings between object- and action-features can also occur [5]. To explore these bindings in the laboratory, simple stimuli with only a few features are used. Instead of reaching for objects, participants usually complete a task on a computer. Often, all that is required, is a key press on a computer keyboard. This simplification allows researchers to examine bindings between

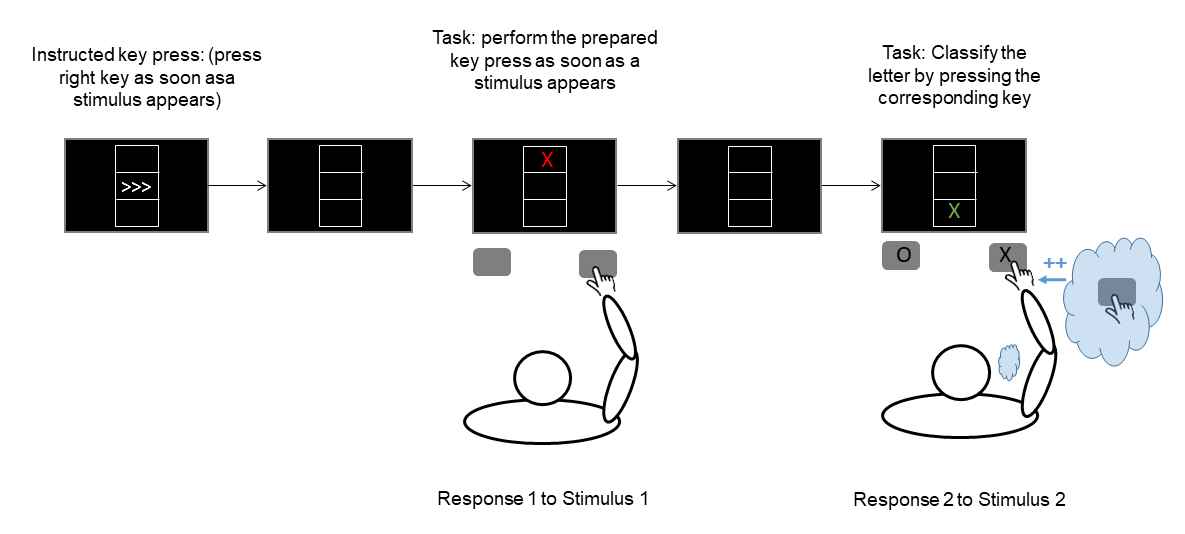

stimulus and response features. For example, participants are shown two stimuli, one after the other. These stimuli differ in certain ways: They can be an X or an O (shape), each appearing in red or green (color) at the top or bottom (position) of the screen. Participants respond to each of the two stimuli by pressing a key (left or right). In such an experiment, (a) some or all features (shape, color, position) can be repeated or are changed between the first and second

stimulus presentation. In addition, (b) the response required by the two stimuli may either repeat or change. It is now interesting to see how quickly and correctly the second of the two responses is made, depending on whether the features of the stimuli and the responses are repeated. Stimulus-response bindings emerge when a response (here: response 1) is executed to a given stimulus (stimulus 1). If a feature of stimulus 1 repeats later on (stimulus 2), this will retrieve the stimulus-response binding, which reactivates the formerly executed response. If the retrieved response matches the requirements of the current situation (i.e., response 2 = response 1), the second response is typically executed faster (represented by “++” in the picture).

Stimulus-response bindings emerge when a response (here: response 1) is executed to a given stimulus (stimulus 1). If a feature of stimulus 1 repeats later on (stimulus 2), this will retrieve the stimulus-response binding, which reactivates the formerly executed response. If the retrieved response matches the requirements of the current situation (i.e., response 2 = response 1), the second response is typically executed faster (represented by “++” in the picture).

The reasoning is as follows: When the first response is made, bindings are formed between the different stimulus features, but also between stimulus and response features. If a feature (such as color) is repeated in the second stimulus, the bindings of this feature to other stimulus and response features are retrieved from memory. This, in turn, influences the second response: For instance, participants respond faster when both the shape and the position of the stimulus are repeated (which indicates bindings in perception). Furthermore, participants also respond faster if the two stimuli have the same shape and require the same response [6, 7]. The latter result shows that object features (such as shape, position, and color) can also be bound to action features (here: a response with the left or right key; [6]). Such bindings are called stimulus-response bindings.
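The binding-and-retrieval logic of this two-stimulus design can be written out as a toy rule. The sketch below (hypothetical names `Stimulus` and `predicted_effect`) models only the route described above: a repeated stimulus feature retrieves the earlier episode and reactivates response 1, which helps if the same response is required again and interferes if a different one is. It predicts only the direction of the effect, not reaction times, and it deliberately ignores other retrieval routes studied in this literature.

```python
from dataclasses import dataclass
from itertools import product

FEATURES = ("shape", "color", "position")


@dataclass(frozen=True)
class Stimulus:
    shape: str     # "X" or "O"
    color: str     # "red" or "green"
    position: str  # "top" or "bottom"


def predicted_effect(s1, r1, s2, r2):
    """Toy rule: direction of the effect on response 2 after episode (s1, r1)."""
    repeated = [f for f in FEATURES if getattr(s1, f) == getattr(s2, f)]
    if not repeated:
        # No stimulus feature repeats, so nothing cues the stored episode.
        return "no retrieval"
    # A repeated feature retrieves the episode, reactivating response 1.
    return "benefit" if r1 == r2 else "cost"


# Vary shape repetition and response repetition; color and position always change.
s1, r1 = Stimulus("X", "red", "top"), "left"
for shape_repeats, response_repeats in product((True, False), repeat=2):
    s2 = Stimulus("X" if shape_repeats else "O", "green", "bottom")
    r2 = "left" if response_repeats else "right"
    print(f"shape repeats: {shape_repeats}, response repeats: {response_repeats}"
          f" -> {predicted_effect(s1, r1, s2, r2)}")
```

The two cells with a repeated shape mirror the text: a repeated shape speeds up a repeated response and slows down a changed one. The two cells without any repeated feature come out as "no retrieval" only because this toy rule considers no other retrieval route.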

Micro-memories as the basis for automatic action

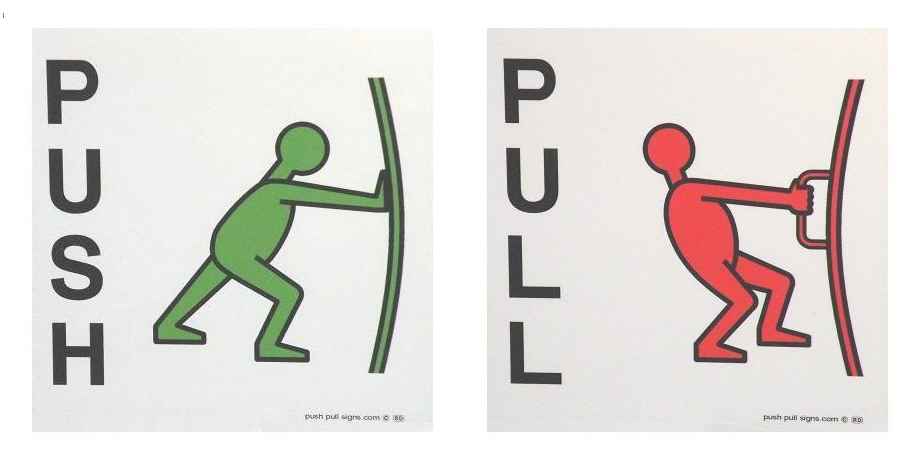

Our daily lives are full of constant connections between stimuli and responses: red lights tell us to stop; green lights signal that we can go. Door handles signal a “pull” response, whereas the absence of door handles (as is often the case in the UK, USA, or Canada) signals a “push” response. The stimulus-response bindings just described are essentially micro-memories that can be very useful for automatically controlling our behavior.

In everyday life, we often interact with contingent stimulus-response pairings. For instance, in the UK, USA, or Canada, door handles signal a “pull” response, whereas absent handles signal a “push” response.

Let's assume that you have to go through several doors on your way to your office, and you open the first one. This creates a short-term episodic binding between the door handle and the “pull” response. Later, when one of these features is repeated (e.g., because the handle of the second door looks like the first one), it reactivates the other linked elements and thus retrieves the previously performed response from memory. The response is pre-activated by memory retrieval and can quickly be carried out again. The benefit is obvious: if perceiving an object (such as a door handle) is sufficient to retrieve the appropriate response (e.g., “pull”), planning and executing the next action becomes easier. This saves time and mental effort [8]: We no longer have to think about how to act because the correct response is already pre-activated and can be carried out efficiently.

However, if a different response must be made to the same

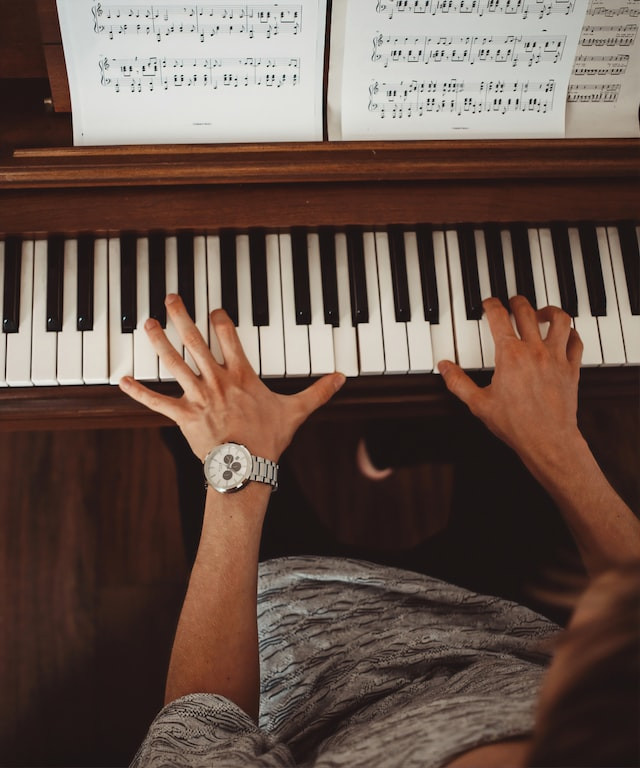

stimulus, for example, a “press” response, delays can occur because the retrieved response is not appropriate anymore. The linkage between stimuli and incorrect responses can also be found in everyday life. Musicians may perhaps have already experienced how difficult it is to correct an initial error (e.g., pressing the wrong piano key) when playing a piece again. When a certain sequence of notes is repeated, there is a tendency to repeat the same mistake. This phenomenon is elegantly explained by

binding and

retrieval: the sound of the last correctly played note is transiently bound to the subsequent (incorrect) response. When the sequence of notes is played again, the sound of the last note will retrieve the bound (incorrect) response. The result: the error occurs again and perpetuates itself. Such a situation can only be resolved by playing the sequence of notes very slowly and carefully. Once correct tone-key sequences have been produced, they can be accessed later. This is because the last stored

stimulus-response binding is the most likely one to be retrieved [9]. In addition, once the tone sequence has been practiced, making a mistake is less impactful [10]. Stimulus-response binding can account for re-occurring errors when playing the piano.

Stimulus-response binding can account for recurring errors when playing the piano.
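The recency principle from [9] can be sketched as an episodic store whose retrieval returns the most recently encoded response for a given stimulus. This is a deliberately strict simplification: the account in [9] treats recency as making retrieval more likely, not certain. The class `EpisodicStore` and the tone and key labels are hypothetical.

```python
class EpisodicStore:
    """Toy store of stimulus-response episodes (hypothetical helper)."""

    def __init__(self):
        # Episodes in the order in which they were encoded.
        self._episodes = []

    def encode(self, stimulus, response):
        """Bind a stimulus to the response executed right after it."""
        self._episodes.append((stimulus, response))

    def retrieve(self, stimulus):
        """Return the response from the most recent matching episode, if any."""
        for stored_stimulus, response in reversed(self._episodes):
            if stored_stimulus == stimulus:
                return response
        return None


# Hypothetical piano example: after the tone G, the correct key would be A.
store = EpisodicStore()
store.encode("tone G", "key B")  # the initial error
print(store.retrieve("tone G"))  # prints: key B (the error is retrieved again)
store.encode("tone G", "key A")  # slow, careful, correct playing
print(store.retrieve("tone G"))  # prints: key A (the newest binding wins)
```

In this sketch, the error is not erased; it is simply overshadowed once a newer, correct binding exists, which is the intuition behind practicing the passage slowly until the correct key has been pressed.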

Learning action sequences: bindings between different actions

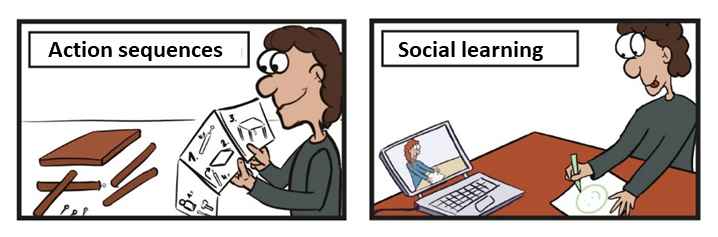

Most laboratory experiments examine bindings between perceived stimuli and executed responses that occur simultaneously. This may give the impression that bindings primarily play a role in snapshots of individual situations. However, what is particularly interesting for our everyday lives is that bindings are also formed between different actions in a sequence of actions [11]. For example, the action of “writing an essay” consists of several sub-actions (“writing paragraphs”), which in turn can be broken down into smaller actions (writing individual words). Typing a single word, in turn, consists of several keystrokes [12]. This means that, even if individual responses are made sequentially, they can be linked together. The resulting connections are called response-response bindings. When one of these actions is repeated, it retrieves other responses associated with it, making it possible to carry out the same action sequence quickly and efficiently. Response-response bindings are probably the first step in the process of learning action sequences. They allow us to drive a car, listen to the radio, and have a conversation, all seemingly without exerting much mental effort [8].

Processes of binding and retrieval promote performing action sequences (left) and social learning from observation (right).
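Response-response bindings can be sketched in the same spirit: each executed response is bound to the one that follows it, so that producing the first response retrieves the rest of the sequence. The class `ResponseChain` and the keystroke labels are hypothetical, and following only the most recent binding from each response is a simplifying assumption; the `max_steps` cap merely prevents endless loops when a response repeats within a sequence.

```python
from collections import defaultdict


class ResponseChain:
    """Toy response-response binding store (hypothetical helper)."""

    def __init__(self):
        # For each response, the responses that immediately followed it.
        self._next = defaultdict(list)

    def perform(self, responses):
        """Execute a sequence, binding each response to the next one."""
        for current, following in zip(responses, responses[1:]):
            self._next[current].append(following)

    def recall(self, start, max_steps=20):
        """Follow the most recent bindings, starting from one response."""
        sequence = [start]
        while len(sequence) <= max_steps and self._next[sequence[-1]]:
            sequence.append(self._next[sequence[-1]][-1])
        return sequence


# Hypothetical typing example: the keystrokes for the word "cat".
chain = ResponseChain()
chain.perform(["c", "a", "t"])
print(chain.recall("c"))  # prints: ['c', 'a', 't']
```

Once the first keystroke is made, the remaining keystrokes are retrieved one after the other, which is a simple way to picture how a sequence can run off without each step being planned separately.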

Learning through observation: bindings for observed actions

Do you always have to act yourself to form stimulus-response bindings? Wouldn’t it be sufficient to just observe another person's response? Several everyday observations suggest that it would: Imagine you are taking part in a physical education class and the instructor shows how to grip a dumbbell and move it. You will find that you can do this surprisingly well, even if you have never done this exact movement with a dumbbell before. Again, stimulus-response bindings are the basis of your actions, but this time they are bindings between stimuli (the dumbbell) and a response that you have observed. The observationally acquired stimulus-response binding can then be retrieved later, for example, when the stimulus is presented again [13]. In this way, another person's response is used to control your own actions. You therefore do not have to carry out every response yourself to acquire the stimulus-response bindings on which automatic behavioral control is based.

Take Home Message

Information from the environment is first broken down into its features by our brain. Through bindings, these features are integrated into a coherent perceptual impression (i.e., an object). This happens not only for object features, but also for features of planned actions. Bindings can also occur between objects (stimuli) and actions (responses). These stimulus-response bindings are useful for automatic action control because they can be retrieved from memory when a stimulus repeats, which makes it easier to perform the response again. Two further examples illustrate the influence of bindings on our daily actions: First, bindings can also be formed between different responses, which is likely to support the learning of action sequences. Second, bindings can also occur between stimuli and observed actions, making it possible to learn from the experiences of others.

References

[1] Treisman, A. M., & Gelade, G. (1980). A feature-integration theory of attention. Cognitive Psychology, 12(1), 97–136. https://doi.org/10.1016/0010-0285(80)90005-5

[2] Treisman, A., & Schmidt, H. (1982). Illusory conjunctions in the perception of objects. Cognitive Psychology, 14(1), 107–141. https://doi.org/10.1016/0010-0285(82)90006-8

[3] Pfister, R., Janczyk, M., & Kunde, W. (2010). Los, beweg dich! - Aber wie? Ideen zur Steuerung menschlicher Handlungen. In-Mind Magazine, 4. https://de.in-mind.org/article/los-beweg-dich-aber-wie-ideen-zur-steueru...

[4] Rosenbaum, D. A. (1980). Human movement initiation: Specification of arm, direction, and extent. Journal of Experimental Psychology: General, 109(4), 444–474. https://doi.org/10.1037/0096-3445.109.4.444

[5] Hommel, B., Müsseler, J., Aschersleben, G., & Prinz, W. (2001). The Theory of Event Coding (TEC): A framework for perception and action planning. Behavioral and Brain Sciences, 24(5), 849–937. https://doi.org/10.1017/S0140525X01000103

[6] Hommel, B. (1998). Event files: Evidence for automatic integration of stimulus-response episodes. Visual Cognition, 5(1), 183–216. https://doi.org/10.1080/713756773

[7] Janczyk, M., Giesen, C. G., Moeller, B., Dignath, D., & Pfister, R. (2023). Event-coding in action and perception: Estimating effect sizes of common experimental designs. Psychological Research, 87, 1012–1042. https://doi.org/10.1007/s00426-022-01705-8

[8] Logan, G. D. (1988). Toward an instance theory of automatization. Psychological Review, 95(4), 492–527. https://doi.org/10.1037/0033-295X.95.4.492

[9] Giesen, C. G., Schmidt, J. R., & Rothermund, K. (2020). The law of recency: An episodic stimulus-response retrieval account of habit acquisition. Frontiers in Psychology, 10, Article 2927. https://doi.org/10.3389/fpsyg.2019.02927

[10] Foerster, A., Moeller, B., Huffman, G., Kunde, W., Frings, C., & Pfister, R. (2021). The human cognitive system corrects traces of error commission on the fly. Journal of Experimental Psychology: General, 151(6), 1419–1432. https://doi.org/10.1037/xge0001139

[11] Moeller, B., & Frings, C. (2019). From simple to complex actions: Response–response bindings as a new approach to action sequences. Journal of Experimental Psychology: General, 148(1), 174.

[12] Yamaguchi, M., & Logan, G. D. (2014). Pushing typists back on the learning curve: Revealing chunking in skilled typewriting. Journal of Experimental Psychology: Human Perception and Performance, 40(2), 592–612.

[13] Giesen, C., Herrmann, J., & Rothermund, K. (2014). Copying competitors? Interdependency modulates stimulus-based retrieval of observed responses. Journal of Experimental Psychology: Human Perception and Performance, 40(5), 1978–1991. https://doi.org/10.1037/a0037614

Images

Image 1, 2, & 3: Created by Carina G. Giesen.

Image 4: (Photo by Vitae London on Unsplash). https://unsplash.com/photos/o3xm7jAUp2I

Image 5: Created by Stephanie Blasl, Department of General Psychology and Research Methods, Trier University, Trier, Germany.

Statements

This article is based on a German In-Mind article (Giesen, C.G., Janczyk, M., Dignath, D., Pfister, R., & Moeller, B. (2023). Das Puzzle im Kopf: Wie Wahrnehmungseindrücke und Handlungspläne entstehen. In-Mind Magazin. https://de.in-mind.org/article/das-puzzle-im-kopf-wie-wahrnehmungseindruecke-und-handlungsplaene-entstehen) that was initially translated to English via Google Translate, further edited, and then finalized by Carina G. Giesen and edited by all other authors.