Diagnosed by AI – What our social media behavior reveals about our mental health

Editorial Assistants: Lorenz Grolig and Elena Benini.

Note: An earlier version of this article has been published in the German version of In-Mind.

When mental illnesses are detected early and treated promptly, people affected tend to suffer less and have a better chance of recovery. Until now, identifying signs of mental illness in everyday life has required human expertise. Researchers are developing new approaches to automatically detect mental illness by using information from social media. But how well does this work, and what risks might this approach involve?

Maria is a successful influencer who reaches thousands of followers every day with her videos. Although she always shows her cheerful side on social media, she has been feeling increasingly empty and drained lately. Checking her messages from followers, she reads a notification from the platform itself: Analysis software has detected changes in her behavior over the past few weeks that indicate depression. Maria is stunned. How could the software know about her mental health? Should she take this message seriously?

Whether a dystopia of surveillance or a utopia of rapid detection of mental illness, this fictional story may still sound futuristic to most people. Yet many scientists are already conducting research into the detection of mental illness on social media. With nearly one billion people worldwide suffering from mental illness per year [1], systems developed in this field hold great potential to detect psychological distress and thus pave the way for early treatment. At the same time, personal and health data must be carefully protected, and the application of algorithms must not lead to user stigmatization or discrimination. This article provides an overview of how artificial intelligence can be used to detect mental illness on social media, how well these systems currently perform, and what ethical challenges they raise.

How do machines learn?

To grasp how specific systems for detecting mental illnesses work, it is helpful to first understand how machines “learn.” Broadly speaking, there are two main approaches: rule-based systems and methods based on artificial intelligence.

Rule-based systems

Rule-based systems represent a traditional approach to automated data processing. For such systems, developers define specific rules for how the program processes information. For example, they might create lists of words that indicate depression: “emptiness,” “listlessness,” “darkness,” etc. They then define rules specifying which word combinations must appear for depression to be suspected. Although rule-based methods are still used in many fields, artificial intelligence often produces much better results. The term artificial intelligence refers to machines performing tasks that are typically associated with intelligent beings, especially humans. In the following, we will take a closer look at machine learning, one form of artificial intelligence.
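To make the idea concrete, here is a minimal sketch of such a rule in Python. The word list comes from the example above; the function name `suspect_depression` and the threshold of two distinct words are assumptions made purely for illustration and are not taken from any real screening tool.

```python
import re

# Word list from the example in the text; a real list would be far longer.
DEPRESSION_WORDS = {"emptiness", "listlessness", "darkness"}

def suspect_depression(post: str, min_hits: int = 2) -> bool:
    """Hypothetical rule: flag a post if at least `min_hits` distinct listed words occur."""
    words = set(re.findall(r"[a-z]+", post.lower()))
    return len(words & DEPRESSION_WORDS) >= min_hits

print(suspect_depression("Nothing but emptiness and darkness lately."))     # True
print(suspect_depression("The darkness outside made for a cozy evening."))  # False
```

The second post shows why a single word is a weak signal: without the two-word requirement, a harmless sentence about a dark evening would be flagged as well.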

Machine learning

In machine learning, a system analyzes large amounts of data and develops its own rules for decision-making. Generally, the more data available, the better the system becomes at recognizing patterns that lead to correct results. Data is processed in two phases: In the training phase, the system is shown data samples that have already been classified by humans, e.g., A) depression is present, B) no depression. The system employs statistical methods to search for patterns that are typical of a particular mental illness and that enable correct classification [2]. In the testing phase, the system is presented with new data samples whose correct classifications are known to humans but not revealed to the system. The system is then evaluated on how often it produces the correct result; that is, how often the system’s classification matches the human classification. Through this process, the system develops rules that estimate how likely each possible classification is for a given data sample. However, it is still humans who decide how the system searches for these rules: Developers try out different methods and ultimately use those that perform most reliably.
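The two phases can be sketched with a deliberately tiny example. The code below is an assumption-laden toy, not one of the systems discussed in this article: it uses an invented four-post training set, a simple word-frequency classifier (a basic form of naive Bayes with add-one smoothing), and a two-post test set whose labels are hidden from the classifier until scoring.

```python
import math
from collections import Counter

LABELS = ("depression", "no depression")

def train(samples):
    """Training phase: count how often each word occurs under each human-assigned label."""
    counts = {label: Counter() for label in LABELS}
    for text, label in samples:
        counts[label].update(text.lower().split())
    return counts

def classify(counts, text):
    """Return the label whose word statistics fit the text best."""
    vocab = set().union(*counts.values())
    scores = {}
    for label, c in counts.items():
        total = sum(c.values()) + len(vocab)  # add-one smoothing
        scores[label] = sum(math.log((c[w] + 1) / total) for w in text.lower().split())
    return max(scores, key=scores.get)

def accuracy(counts, samples):
    """Testing phase: share of samples where the system matches the human label."""
    return sum(classify(counts, t) == label for t, label in samples) / len(samples)

# Invented toy data, for illustration only.
training_set = [
    ("i feel empty and tired", "depression"),
    ("everything feels hopeless and empty", "depression"),
    ("great run with friends today", "no depression"),
    ("excited about the trip with friends", "no depression"),
]
test_set = [
    ("so tired and hopeless", "depression"),
    ("great trip today", "no depression"),
]

model = train(training_set)
print(accuracy(model, test_set))
```

With so little data the result says nothing about real-world performance; the point is only that the rules (here, word counts) come from the training data, while the evaluation uses data the system has not seen.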

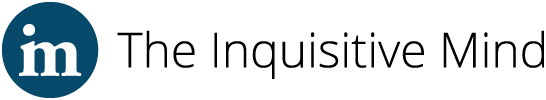

Fig. 1. Artificial neural networks for detecting mental illness on social media. The top half shows a shallow neural network with one hidden layer, and the bottom half shows a deep neural network with three hidden layers.


Deep learning

Deep learning is a specific form of machine learning that is increasingly used to detect mental illness [2]. As with conventional machine learning, the system searches for patterns in large amounts of data and develops rules according to which new data can then be assessed. Deep learning is characterized by so-called artificial neural networks. A shallow neural network consists of three layers (see upper half of Figure 1). The first layer of neurons represents the features of training examples in mathematical form. The middle layer processes the values of the first layer in a way that its neurons contain large values (activations) when the example contains an important pattern (i.e., relevant information). The final layer contains as many neurons as there are outcome categories, for example, three neurons representing “no depression,” “mild depression,” and “severe depression.” The system’s decision corresponds to the neuron in the final layer that has the highest activation value. A deep neural network has several middle layers (see lower half of Figure 1). Processing therefore takes place in multiple steps, which allows the model to capture more complex relationships and achieve higher performance [3].
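The three-layer structure of a shallow network can be written out in a few lines of Python. This is only a sketch under strong assumptions: the two input features and all weights below are invented and set by hand, whereas a real network learns its weights from training data and uses far more neurons.

```python
LABELS = ["no depression", "mild depression", "severe depression"]

# Hand-picked, hypothetical weights: 2 input features -> 2 hidden neurons -> 3 outputs.
HIDDEN_W = [[1.0, 0.5], [-1.0, -0.5]]
HIDDEN_B = [0.0, 1.0]
OUT_W = [[0.0, 1.0], [1.0, 0.5], [2.0, 0.0]]
OUT_B = [0.0, 0.0, -1.0]

def weighted_sums(inputs, weights, biases):
    """Each neuron computes a weighted sum of all inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def predict(features):
    # Middle layer: negative sums are set to 0 (ReLU), so a neuron is only
    # "active" when its pattern is present in the input.
    hidden = [max(0.0, v) for v in weighted_sums(features, HIDDEN_W, HIDDEN_B)]
    # Final layer: one activation per outcome category.
    output = weighted_sums(hidden, OUT_W, OUT_B)
    # The decision is the category whose neuron has the highest activation.
    return LABELS[output.index(max(output))]

# Assumed features: share of negative words, share of night-time posts.
print(predict([0.1, 0.1]))  # no depression
print(predict([0.5, 0.4]))  # mild depression
print(predict([0.9, 0.8]))  # severe depression
```

A deep network would simply repeat the middle-layer step several times, feeding each layer's activations into the next.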

Figure 2. Comparing yourself to others on social media can be mentally stressful.


How well can mental illness be detected on social media?

How well a system can recognize symptoms of mental illness depends on the specific system, the type of mental illness, and the information available about the individual. Below are examples of systems developed to detect common mental illnesses.

Depression

Depression is a mental illness characterized by a persistently low mood, fatigue, or a loss of interest in everyday activities lasting at least two weeks. Other possible symptoms are difficulty concentrating, feelings of worthlessness, and changes in appetite or sleep [4]. Depression is the most common mental illness among adults worldwide [1].

A deep learning system called EnsemBERT was developed to investigate signs of depression in Reddit users [5]. Reddit is a social platform where users can take part in public discussions on various topics. To develop the system, public Reddit posts from 100 users were analyzed. These users had also filled in the BDI-II [6], a well-established diagnostic questionnaire used to assess the severity of depressive symptoms. In the BDI-II, patients do not simply answer “yes” or “no” but indicate the degree to which they agree with statements such as “I am sad” or “I have difficulty concentrating.” The system specifically searched for Reddit posts whose content resembled statements from the BDI-II. Based on the users’ posts, the system then estimated how individuals would respond to the BDI-II items. During the testing phase, EnsemBERT achieved higher accuracy than many previous systems: On average, its estimates for individual statements deviated by less than one point from the participants’ actual score in the questionnaire (out of four possible grades). Furthermore, the system correctly assessed the questionnaire's depression rating as “minimal,” “mild,” “moderate,” or “severe” in 7 out of 10 cases [5]. A particularly innovative aspect of the system is that it not only estimates whether depression is present, but also identifies specific symptoms such as sleep problems or hopelessness. However, the model was trained on a relatively small dataset, and further research with larger samples is needed. In addition, the system’s assessments are not yet reliable enough for practical clinical use.
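The two performance figures above correspond to two simple calculations, sketched below. This is not the evaluation code of EnsemBERT; the answer vectors are invented. The sketch assumes the standard BDI-II scoring, in which each of the 21 items is rated from 0 to 3 and the total score is mapped to a severity category using the cut-offs from the manual [6].

```python
def severity(total: int) -> str:
    """Map a BDI-II total score (0-63) to its severity category."""
    if total <= 13:
        return "minimal"
    if total <= 19:
        return "mild"
    if total <= 28:
        return "moderate"
    return "severe"

def mean_absolute_error(predicted, actual):
    """Average distance, in points, between estimated and true item scores."""
    return sum(abs(p - a) for p, a in zip(predicted, actual)) / len(actual)

# Invented answers of one hypothetical participant (21 items, each 0-3).
actual    = [1, 2, 0, 1, 3, 1, 0, 2, 1, 1, 0, 2, 1, 0, 1, 2, 1, 0, 1, 1, 1]
# Invented estimates, standing in for what a system might infer from posts.
predicted = [1, 1, 0, 2, 2, 1, 1, 2, 1, 0, 0, 2, 1, 1, 1, 1, 1, 0, 1, 2, 1]

mae = mean_absolute_error(predicted, actual)
print(round(mae, 2))                 # 0.38, i.e. less than one point per item
print(severity(sum(actual)))         # moderate
print(severity(sum(predicted)))      # moderate
```

In this made-up case, several individual items are off by one point, yet the estimated and true totals fall into the same category. The reverse can also happen: small item-level errors can push a total across a cut-off, which is one reason the category is matched less often than the low item-level error might suggest.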

Eating disorders

Anorexia nervosa is the most common eating disorder. It is characterized by severe weight loss and a dangerously low body weight. People with anorexia often also have a distorted view of their own body [4].

X (formerly known as Twitter) is a social media platform where users can post short text messages publicly. To distinguish between users with and without eating disorders, a machine learning system called ED-Filter was developed and trained on data from 37,405 posts on X. The system first filters the posts for specific words, sentence patterns, and combinations of topics that could contain information about the person's mental health. For example, the system retains statements such as “I hate my body.” The filtered information is then analyzed to indicate whether an eating disorder might be present. The system correctly assessed whether eating-disordered behavior was present in about 8 out of 10 cases. Users with a self-reported eating disorder differed from other users primarily in that they used more emotional, body-related, and control-oriented language [7]. A strength of ED-Filter is that it specifically selects interpretable characteristics, which makes the basis for its assessments transparent. However, the data selection in this study was very limited: Only individuals who wrote publicly about their diagnosis were considered, and the self-reported diagnoses were not clinically verified.

We have seen above that artificial intelligence can, in principle, be used to estimate the mental health of social media users. However, such systems are typically developed for only one or a few mental illnesses and for one specific social media platform [2]. Despite its great potential, automatically detecting mental illness is controversial, and many challenges still need to be solved before it can be used reliably [2]. We will look at these challenges in the next section.

Figure 3. Communication happens both in person and online.


What are the challenges involved in automatically detecting mental illness?

Data and ethics

Machine learning and deep learning require large amounts of data. The systems identify patterns that distinguish between people with and without a particular mental illness. Usually, a very large amount of data is needed to identify characteristics that are typical of mental illness [2,8]. At the same time, mental health is a highly sensitive topic in which personal data must be given special protection. This is why binding ethical standards for the use of data in research and the health sector are essential [9,10]. For example, in the European Union, the Digital Services Act has, since 2024, required very large online platforms (those with more than 45 million monthly active users) to grant certain vetted researchers access to selected data, such as public user profiles. Data from private accounts or content that is not publicly accessible may only be processed in very specific cases, for example when a user has given their explicit consent [11,12]. However, regulations vary considerably between regions. China regulates mental-health data strictly and in a state-driven manner under its Personal Information Protection Law [13], while India makes data easier for researchers to access and offers rather weak structural protection of mental-health-related data under the Digital Personal Data Protection Act [14]. In the US, there is no single comprehensive national data protection law, but rather a patchwork of federal and state laws.

Accuracy and robustness

The use of machine-learning systems (for example, to provide feedback to users) is only justified if the systems are reliable. Reliability can be divided into two aspects. First: How accurate are the systems’ results? In other words, are people with mental illness actually identified as such? Are people without mental illness correctly classified as healthy? Second: How robust are the systems’ results? That is, do the classifications remain accurate for highly diverse data? In recent years, systems for detecting mental illness using information from social media platforms have become more powerful. However, most systems work well only when they are asked to classify messages and individuals that are similar to the data used to develop them [2]. Furthermore, the classification accuracy of the systems depends on the age, gender, and ethnicity of the users. For example, women were more likely than men to be incorrectly classified as having an eating disorder [15], and depression was less accurately detected in Black Americans than in White Americans [16].
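The two accuracy questions have standard names: sensitivity (the share of people with the illness who are identified as such) and specificity (the share of people without it who are correctly classified as healthy). The sketch below computes both separately for two groups of users; all records and the group names "A" and "B" are invented, and the code assumes every group contains people with and without the illness.

```python
from collections import defaultdict

def sensitivity_specificity(pairs):
    """pairs: (actually_ill, classified_as_ill) booleans for each person."""
    tp = sum(1 for actual, predicted in pairs if actual and predicted)
    fn = sum(1 for actual, predicted in pairs if actual and not predicted)
    tn = sum(1 for actual, predicted in pairs if not actual and not predicted)
    fp = sum(1 for actual, predicted in pairs if not actual and predicted)
    return tp / (tp + fn), tn / (tn + fp)

# Invented records: (group, actually ill, classified as ill).
records = [
    ("A", True, True), ("A", True, True), ("A", False, False), ("A", False, False),
    ("B", True, True), ("B", True, False), ("B", False, False), ("B", False, True),
]

by_group = defaultdict(list)
for group, actual, predicted in records:
    by_group[group].append((actual, predicted))

results = {group: sensitivity_specificity(pairs) for group, pairs in by_group.items()}
for group, (sens, spec) in results.items():
    print(group, sens, spec)
```

Averaged over everyone, this hypothetical system is right in 6 of 8 cases, which hides the fact that it works perfectly for group A and no better than chance for group B. Reporting results per group is what reveals such disparities.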

Interpretability and acceptance

To achieve societal acceptance for the machine detection of mental illness on social media, the systems’ decisions must be interpretable – in other words, people should be able to understand how the system arrived at a particular decision. The systems should only be used in practice when it is clear that they rely on characteristics that are genuinely relevant [2,17]. However, it is still unclear how most systems arrive at their conclusions about a person's mental health [3]. This is because the patterns and rules that the systems find are mathematical objects such as matrices. They describe complex interactions between characteristics and are generally not understandable to humans. Numerous scientists are researching how the decision-making processes of existing models can be better understood and how new models can be constructed in a more transparent way.

Collaboration between different fields

Research into the machine detection of mental illness takes place at the interface of several scientific disciplines. This includes, for example, computer science, which focuses on the machine processing of human language, and psychology, which studies human experience and behavior. If only one group of experts works on this kind of research, important issues can be overlooked. Computer scientists may not pay enough attention to psychological definitions of mental illnesses and their symptoms. Psychologists may not be familiar with machine learning, which can lead to biased results [8]. This is why we need research teams in which experts from different fields work together. This helps ensure that psychological diagnostic standards are followed, that individuals in the comparison group do not have a mental illness, and that the systems provide reliable and fair results.

Figure 4. On social media, we can be constantly judged by others.


Are machines on their way to replacing psychologists?

Mental illnesses are diagnosed according to established quality standards. Psychotherapists use standardized questionnaires and conduct clinical interviews, taking into account individual factors such as life history and body language. Artificial intelligence cannot yet meet these standards satisfactorily, but it might help identify signs of mental illness on social media platforms and point these out to users [18]. Psychotherapists could then examine whether a mental illness is actually present. This would make it possible to intervene at an early stage and support individuals in their recovery [10]. However, it should be noted that not everyone uses social media, and people differ greatly in how much they reveal about themselves online [18]. It is also unclear to what extent algorithms can judge the reliability of information they process. Artificial intelligence could therefore support therapists in diagnosing mental illness, but is not expected to replace them in the foreseeable future.

What advice can we thus give Maria from the fictional story at the beginning of this article? Maria should know that the assessment provided by the analysis software is not the same as a diagnosis by a psychotherapist. At the same time, we now know that artificial intelligence, when well trained and supplied with reliable data, can offer a helpful indication of a person’s mental health. We could therefore recommend that Maria visit a psychotherapy practice or outpatient clinic. This would allow her to receive a clear diagnosis of whether she has a mental illness – and, if necessary, seek professional support.

Bibliography

[1] World Health Organization, Mental Health Report 2022, 2023. [Online]. Available: https://www.who.int/teams/mental-health-and-substance-use/world-mental-h...

[2] T. Zhang, A. M. Schoene, S. Ji, and S. Ananiadou, “Natural language processing applied to mental illness detection: a narrative review,” npj Digital Medicine, vol. 5, no. 1, 2022, doi: 10.1038/s41746-022-00589-7.

[3] C. Su, Z. Xu, J. Pathak, and F. Wang, “Deep learning in mental health outcome research: a scoping review,” Translational Psychiatry, vol. 10, no. 1, 2020, doi: 10.1038/s41398-020-0780-3.

[4] World Health Organization, International Classification of Diseases for Mortality and Morbidity Statistics, 11th ed., 2019. [Online]. Available: https://icd.who.int/

[5] F. Ravenda et al., “Transforming social media text into predictive tools for depression through AI: A test-case study on the Beck Depression Inventory-II,” PLOS Digital Health, vol. 4, no. 6, p. e0000848, 2025, doi: 10.1371/journal.pdig.0000848.

[6] A. T. Beck, R. A. Steer, and G. K. Brown, Manual for the Beck Depression Inventory-II. San Antonio, TX: Psychological Corporation, 1996.

[7] M. Naseriparsa et al., “ED-Filter: dynamic feature filtering for eating disorder classification,” Artificial Intelligence Review, vol. 58, p. 237, 2025, doi: 10.1007/s10462-025-11244-4.

[8] S. Chancellor and M. De Choudhury, “Methods in predictive techniques for mental health status on social media: a critical review,” npj Digital Medicine, vol. 3, no. 1, 2020, doi: 10.1038/s41746-020-0233-7.

[9] S. Golder, S. Ahmed, G. Norman, and A. Booth, “Attitudes toward the ethics of research using social media: a systematic review,” Journal of Medical Internet Research, vol. 19, no. 6, p. e195, 2017, doi: 10.2196/jmir.7082.

[10] N. K. Iyortsuun, S.-H. Kim, M. Jhon, H.-J. Yang, and S. Pant, “A review of machine learning and deep learning approaches on mental health diagnosis,” Healthcare, vol. 11, no. 3, p. 285, 2023, doi: 10.3390/healthcare11030285.

[11] European Parliament and Council of the European Union, “Regulation (EU) 2016/679 of the European Parliament and of the Council of 27 April 2016 on the protection of natural persons with regard to the processing of personal data and on the free movement of such data, and repealing Directive 95/46/EC (General Data Protection Regulation),” Official Journal of the European Union, L 119, pp. 1–88, 2016.

[12] European Parliament and Council of the European Union, “Regulation (EU) 2022/2065 of the European Parliament and of the Council of 19 October 2022 on a Single Market for Digital Services and amending Directive 2000/31/EC (Digital Services Act), Art. 40,” Official Journal of the European Union, L 277, pp. 1–102, 2022.

[13] R. Creemers and G. Webster, Translation: Personal Information Protection Law of the People’s Republic of China – Effective Nov. 1, 2021, 2021. [Online]. Available: https://digichina.stanford.edu/work/translation-personal-information-pro...

[14] Ministry of Law and Justice (Legislative Department), Government of India, The Digital Personal Data Protection Act, 2023 (No. 22 of 2023). [Online]. Available: https://www.meity.gov.in/static/uploads/2024/06/2bf1f0e9f04e6fb4f8fef35e...

[15] D. Solans Noguero, D. Ramírez-Cifuentes, E. A. Ríssola, and A. Freire, “Gender bias when using artificial intelligence to assess anorexia nervosa on social media: data-driven study,” Journal of Medical Internet Research, vol. 25, 2023, doi: 10.2196/45184.

[16] S. Rai et al., “Key language markers of depression on social media depend on race,” Proceedings of the National Academy of Sciences, vol. 121, no. 14, p. e2319837121, 2024, doi: 10.1073/pnas.2319837121.

[17] T. Miller, “Explanation in artificial intelligence: insights from the social sciences,” Artificial Intelligence, vol. 267, pp. 1–38, 2019, doi: 10.1016/j.artint.2018.07.007.

[18] Y. Zhao et al., “Biases in using social media data for public health surveillance: A scoping review,” International Journal of Medical Informatics, vol. 164, 2022, doi: 10.1016/j.ijmedinf.2022.104804.

Figure Sources

Fig. 0 SOURCE: Photo by mikoto.raw Photographer from Pexels: https://www.pexels.com/photo/photo-of-woman-using-mobile-phone-3367850/

Fig. 1 SOURCE: Created by the authors.

Fig. 2 SOURCE: Photo by Andrea Piacquadio from Pexels: https://www.pexels.com/photo/young-woman-using-laptop-at-home-3807747/

Fig. 3 SOURCE: Photo by Anna Shvets from Pexels: https://www.pexels.com/photo/elderly-happy-women-browsing-internet-while...

Fig. 4 SOURCE: Photo by Oladimeji Ajegbile from Pexels: https://www.pexels.com/photo/man-in-white-crew-neck-top-reaching-for-the...